For people lost in the backcountry, a quick rescue can be essential to survival.

But search and rescue is a complex operation that often relies on extensive manpower and costly helicopter flights. Remotely piloted aircraft systems (RPAS) fitted with detection systems are less expensive, and because they fly closer to the ground, they are safer for searchers in poor weather conditions.

Dr. Michal Aibin, a faculty researcher in BCIT’s School of Computing and Academic Studies, is using his expertise in the optimization of computer networks to improve the real-time capacity of RPAS object detection systems to locate people on the ground.

“You Only Look Once”

Working with industry partners Spexi and InDro Robotics and peers at Northeastern University, Dr. Aibin is integrating image-processing artificial intelligence using an algorithm called YOLO (“You Only Look Once”). Because searches must be done quickly, real-time video processing is vital. A system is considered real-time if a person can be detected within one second. Although YOLOv4 is very good at zeroing in on humans in all environmental conditions, it processes relatively few frames per second. To compensate, Dr. Aibin’s team created a frame-skipping algorithm that significantly reduces the number of frames YOLOv4 must process.
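The trade-off between detector speed and video frame rate can be illustrated with a little arithmetic. The sketch below is not the team's algorithm: it assumes fixed-interval skipping, and the function name `min_skip_interval` and the frame rates used are hypothetical values chosen for illustration only.

```python
import math


def min_skip_interval(video_fps: float, detector_fps: float) -> int:
    """Return the smallest k such that processing only every k-th frame
    lets a detector running at detector_fps keep pace with the feed."""
    if video_fps <= 0 or detector_fps <= 0:
        raise ValueError("frame rates must be positive")
    # If the detector is already fast enough, every frame can be kept (k = 1).
    return max(1, math.ceil(video_fps / detector_fps))


# Hypothetical numbers, not measurements from the project.
interval = min_skip_interval(video_fps=30.0, detector_fps=8.0)
print(f"process every {interval}th frame")
```

Under these assumed rates, the detector would see 7.5 frames per second, which is within its capacity and still gives several chances per second to spot a person.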

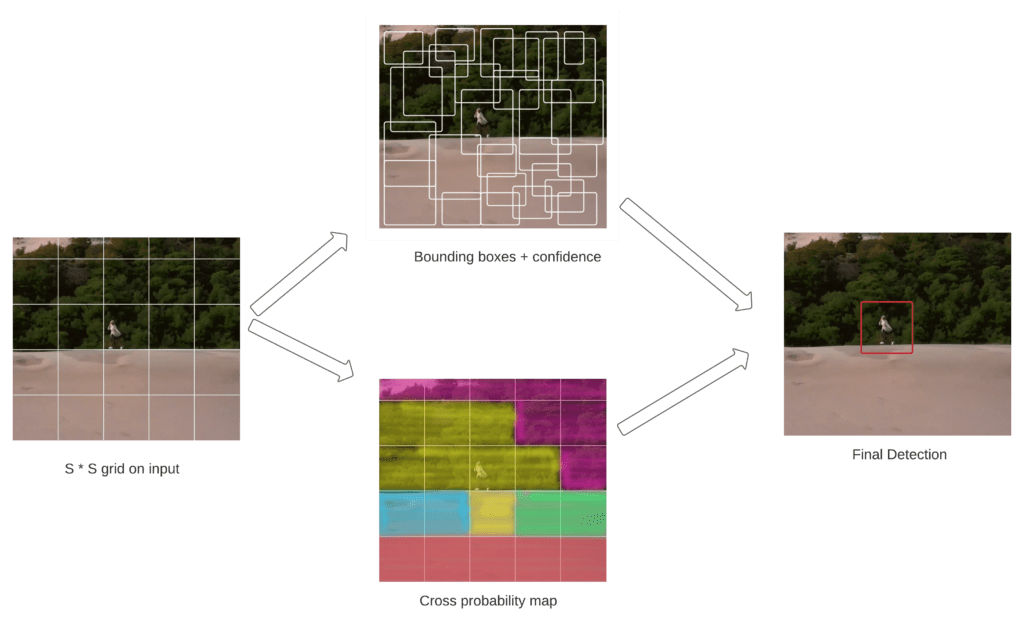

Different frame-skipping settings can be selected depending on how obscured the search area is. The live video feed collected by the RPAS passes through the frame-skipping algorithm, and the remaining frames are then sent to YOLOv4, which draws bounding boxes and text on the video when humans are detected (see the following figure). The GPS in the RPAS can then be used to notify rescue operations of sighting locations.
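The flow described above (video feed, frame skipping, then detection) might be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: the helpers `keep_every` and `find_people` are hypothetical, the skipping rule is a simple fixed interval, and `fake_detect` is a stand-in for YOLOv4 so the example runs without a trained model or a camera.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height in pixels (assumed layout)


def keep_every(frames: Iterable, interval: int) -> Iterator[Tuple[int, object]]:
    """Yield (index, frame) for every interval-th frame and drop the rest."""
    if interval < 1:
        raise ValueError("interval must be at least 1")
    for index, frame in enumerate(frames):
        if index % interval == 0:
            yield index, frame


def find_people(
    frames: Iterable,
    detect: Callable[[object], List[Box]],
    interval: int,
) -> List[Tuple[int, List[Box]]]:
    """Run the detector only on the kept frames and collect sightings."""
    sightings = []
    for index, frame in keep_every(frames, interval):
        boxes = detect(frame)
        if boxes:  # an empty list means nobody was detected in this frame
            sightings.append((index, boxes))
    return sightings


def fake_detect(frame: object) -> List[Box]:
    """Stand-in for YOLOv4: reports one box when the frame is labelled 'person'."""
    return [(10, 20, 30, 60)] if frame == "person" else []


# A toy feed of seven frames; strings stand in for real images.
feed = ["empty", "empty", "person", "person", "person", "empty", "person"]
sightings = find_people(feed, fake_detect, interval=3)
print(sightings)
```

A larger `interval` lightens the load on the detector but increases the chance that a briefly visible person falls entirely within skipped frames, which is one reason a selectable setting is useful. In a real system, each sighting's frame index would be matched to the aircraft's GPS position at that moment.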

YOLOv4 Object Detection Configuration