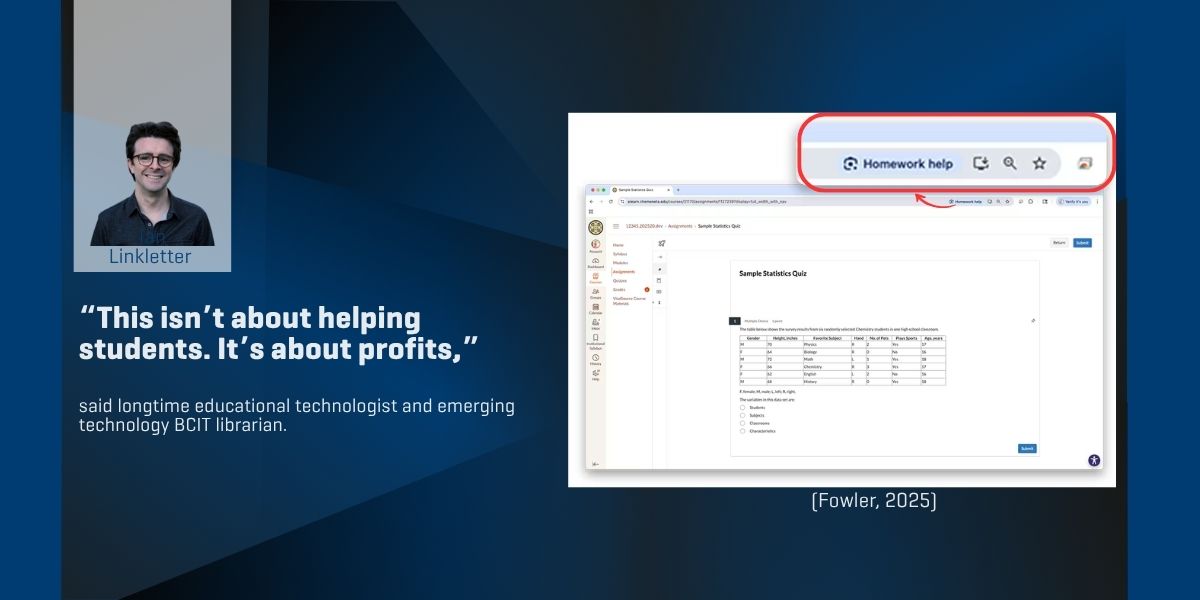

When Google quietly tested a “homework help” button inside its Chrome browser earlier this month, the company likely expected little more than uneventful adoption. Instead, the experiment provoked outrage from many educators who saw it as a direct attack on academic integrity. The button appeared only on quiz pages inside learning management systems, a deliberate targeting that left librarians, faculty, and technologists stunned. For Ian Linkletter, an emerging technology librarian and longtime educational technologist at the British Columbia Institute of Technology (BCIT), the feature was a wake-up call.

“It wasn’t just a button,” he said. “It was Google inserting itself between faculty and students at the exact moment when trust and academic integrity matter most.”

How the alarm was raised

Ian first noticed discussion of the feature on September 2, when Chrome pushed the update. Forums and professional listservs buzzed, but no one seemed to be contacting Google directly. Drawing on more than a decade in educational technology, Ian decided to flag the issue publicly. The reaction from his professional network was swift: librarians, instructors, and technologists voiced their support. Even so, Ian has encountered resignation. “Some people say AI is inevitable and we should give up,” he reflected. “But we can’t. This is exactly the moment to fight for an ethical future.”

Why the button matters

Academic integrity, Ian argues, isn’t about surveillance or detection software; it’s about trust between teachers and students. By inserting an AI-powered shortcut directly into coursework, Google disrupted that relationship before classes even began. “Faculty hadn’t even had a chance to talk to students about AI, what’s appropriate, what’s ethical,” he explained. “Google made it about them from day one.”

AI’s deeper risks

The Chrome controversy is only one example of how artificial intelligence is reshaping education in ways that many see as harmful. For beginners, Ian warns, AI tools function as seductive shortcuts that bypass the learning process. More troubling is AI’s shaky relationship with truth. Large language models don’t reason; they predict the next likely word. The result can be misinformation, overconfident answers, and even fabricated citations. “I’ve seen references where the journal exists, the year exists, the author exists, but the article itself doesn’t. It was made up,” Ian said. To him, that poses a profound threat: a future where students learn from sources that never existed, and libraries struggle to separate real scholarship from machine-generated noise.

Surveillance isn’t the answer

If AI encourages shortcuts, some suggest countering with stricter monitoring: AI detectors, automated proctoring, digital surveillance. Ian strongly rejects that approach. “Surveillance teaches students their instructor doesn’t trust them. It creates an adversarial environment that hurts learning,” he said. Instead, he calls for open dialogue: conversations between faculty and students about AI’s limits, risks, and ethics.

A fight still ahead

Google may have paused its “homework help” button, but Ian stresses that the fight is far from over. Other companies are embedding AI into browsers and productivity tools at breakneck speed. In the gold rush for market share, he warns, pedagogy is being pushed aside. “This isn’t about helping students. It’s about profits,” he said. “If we want an ethical future for education, we need to push back together.”

Get to know BCIT Library, including resources available to students, faculty, and staff.